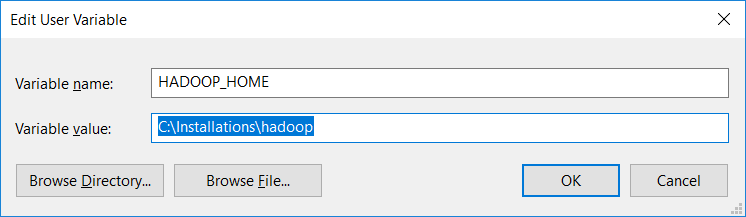

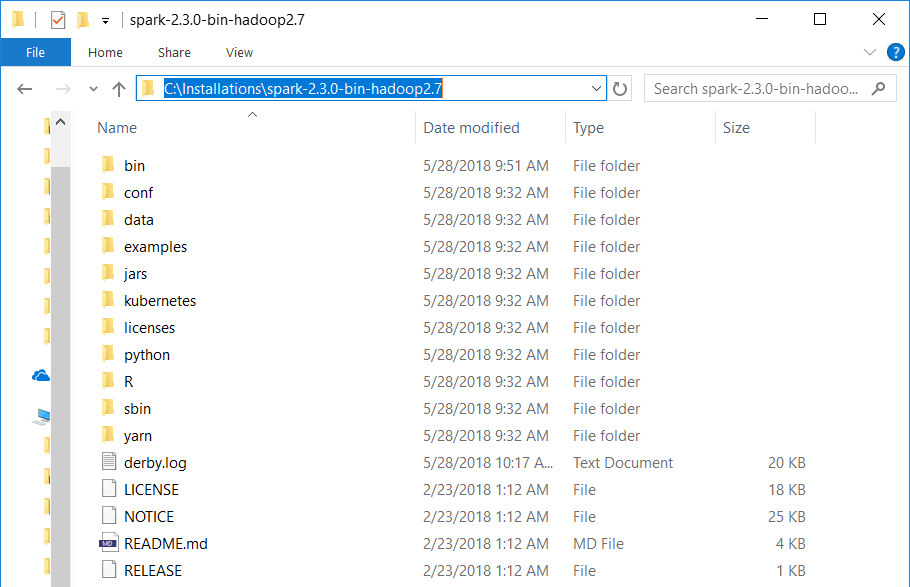

The first step is to download Java, Hadoop, and Spark. Spark seems to have trouble working with newer versions of Java, so I'm sticking with Java 8 for now. I can't guarantee that this guide works with newer versions of Java, so please try Java 8 if you're having issues. Also, with the new Oracle licensing structure (2019+), you may need to create an Oracle account to download Java 8.
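Since this guide depends on Java 8, it can help to confirm which Java version is actually on the PATH before installing anything else. The following is a minimal sketch, not part of the original guide: the function names `parse_java_major` and `installed_java_major` are hypothetical, and it assumes `java -version` prints a quoted version string such as `"1.8.0_201"`.

```python
import re
import subprocess


def parse_java_major(version_output: str) -> int:
    """Extract the major Java version from the text printed by `java -version`."""
    match = re.search(r'version "(\d+)(?:\.(\d+))?', version_output)
    if match is None:
        raise ValueError("no quoted version string found in the output")
    first, second = match.group(1), match.group(2)
    # Java 8 and earlier report themselves as 1.x (for example "1.8.0_201").
    if first == "1" and second is not None:
        return int(second)
    return int(first)


def installed_java_major() -> int:
    """Run `java -version` and return its major version (hypothetical helper)."""
    # `java -version` writes its banner to stderr rather than stdout.
    result = subprocess.run(["java", "-version"], capture_output=True, text=True)
    return parse_java_major(result.stderr)
```

Calling `installed_java_major()` in a Python prompt should return `8` on a machine set up the way this guide assumes. Any other value suggests a different Java installation is being picked up first.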

#Install spark on windows 10 without hadoop update

Update: Software version numbers have been updated and the text has been clarified.

#Install spark on windows 10 without hadoop install

Installing and Running Hadoop and Spark on Windows

We recently got a big new server at work to run Hadoop and Spark (H/S) on for a proof-of-concept test of some software we're writing for the biopharmaceutical industry, and I hit a few snags while trying to get H/S up and running on Windows Server 2016 / Windows 10. I've documented here, step by step, how I managed to install and run this pair of Apache products directly in the Windows cmd prompt, without any need for Linux emulation.

#Install spark on windows 10 without hadoop code

When I run pyspark in the terminal, Jupyter starts fine and runs code. However, the following code keeps breaking.

Which results in the error:

    Py4JJavaError                             Traceback (most recent call last)
    ~\spark\python\pyspark\sql\utils.py in deco(*a, **kw)
         64 except 4JJavaError as e:
    ~\spark\python\lib\py4j-0.10.7-src.zip\py4j\protocol.py in get_return_value(answer, gateway_client, target_id, name)
        327 "An error occurred while calling.

    Py4JJavaError: An error occurred while calling o24.applySchemaToPythonRDD.
    : .AnalysisException: : : The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rw-rw-rw-
        at .(HiveExternalCatalog.scala:106)
        at .(HiveExternalCatalog.scala:194)
        at .$lzycompute(SharedState.scala:114)
        at .(SharedState.scala:102)
        at .(HiveSessionStateBuilder.scala:39)
        at .$lzycompute(HiveSessionStateBuilder.scala:54)
        at .(HiveSessionStateBuilder.scala:52)
        at .hive.HiveSessionStateBuilder$$anon$1.(HiveSessionStateBuilder.scala:69)
        at .(HiveSessionStateBuilder.scala:69)
        at .internal.BaseSessionStateBuilder$$anonfun$build$2.apply(BaseSessionStateBuilder.scala:293)
        at .$lzycompute(SessionState.scala:79)
        at .(SessionState.scala:79)
        at .$lzycompute(QueryExecution.scala:57)
        at .(QueryExecution.scala:55)
        at .(QueryExecution.scala:47)
        at .Dataset$.ofRows(Dataset.scala:74)
        at .SparkSession.internalCreateDataFrame(SparkSession.scala:577)
        at .SparkSession.applySchemaToPythonRDD(SparkSession.scala:752)
        at .SparkSession.applySchemaToPythonRDD(SparkSession.scala:737)
        at 0(Native Method)
        at (NativeMethodAccessorImpl.java:62)
        at (DelegatingMethodAccessorImpl.java:43)
        at .invoke(Method.java:498)
        at (MethodInvoker.java:244)
        at (ReflectionEngine.java:357)
        at (AbstractCommand.java:132)
        at (CallCommand.java:79)
        at py4j.GatewayCn(GatewayConnection.java:238)
    Caused by: : : The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rw-rw-rw-
        at .ql.(SessionState.java:522)
        at .(HiveClientImpl.scala:180)
        at .(HiveClientImpl.scala:114)
        at 0(Native Method)
        at (NativeConstructorAccessorImpl.java:62)
        at (DelegatingConstructorAccessorImpl.java:45)
        at .newInstance(Constructor.java:423)
        at .(IsolatedClientLoader.scala:264)
        at .hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:385)
        at .hive.HiveUtils$.newClientForMetadata(HiveUtils.scala:287)
        at .$lzycompute(HiveExternalCatalog.scala:66)
        at .(HiveExternalCatalog.scala:65)
        at .hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply$mcZ$sp(HiveExternalCatalog.scala:195)
        at .hive.HiveExternalCatalog$$anonfun$databaseExists$1.apply(HiveExternalCatalog.scala:195)
        at .(HiveExternalCatalog.scala:97)
    Caused by: : The root scratch dir: /tmp/hive on HDFS should be writable. Current permissions are: rw-rw-rw-
        at .ql.(SessionState.java:612)
        at .ql.(SessionState.java:554)
        at .ql.(SessionState.

Based on my research, I am fairly certain this has to do with my installation.
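The error message says `/tmp/hive` "should be writable" while reporting `rw-rw-rw-`, which looks contradictory at first glance. The sketch below shows one way that can happen. It assumes the check requires every bit of the octal mask `733` (`rwx-wx-wx`), which is my understanding of Hive's scratch-directory check but is not confirmed by the text here. The names `mode_from_string`, `REQUIRED_MASK`, and `passes_scratch_dir_check` are hypothetical.

```python
def mode_from_string(perms: str) -> int:
    """Convert a 9-character permission string such as 'rw-rw-rw-' to an integer mode."""
    if len(perms) != 9:
        raise ValueError("expected exactly 9 permission characters")
    mode = 0
    for index, char in enumerate(perms):
        expected = "rwx"[index % 3]
        if char == expected:
            # The leftmost character maps to the highest of the 9 permission bits.
            mode |= 1 << (8 - index)
        elif char != "-":
            raise ValueError(f"unexpected character {char!r} at position {index}")
    return mode


# Assumption: the directory must carry at least rwx-wx-wx (octal 733).
REQUIRED_MASK = 0o733


def passes_scratch_dir_check(perms: str) -> bool:
    """Return True when every bit in REQUIRED_MASK is set in the given permissions."""
    return (mode_from_string(perms) & REQUIRED_MASK) == REQUIRED_MASK
```

Under that assumption, `rw-rw-rw-` fails because the execute bits are missing, even though every write bit is set, whereas `rwxrwxrwx` passes. On Windows, a commonly cited workaround is to widen the permissions on the directory with Hadoop's `winutils.exe` (for example `winutils.exe chmod 777 \tmp\hive`); whether that applies depends on how your installation is laid out.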
